Metaphors Be With You

Preopening

Metaphors Be With You: A Tireless Work On Play On Words

Copyright © 2015-2020 Richard Madsen Neff

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with the Invariant Sections being “Preopening” and “Postclosing”, with the Front-Cover Texts being “Metaphors Be With You: A Tireless Work On Play On Words”, and no Back-Cover Texts.

A link to the license is in the “Postclosing” section.

Dedication

- To my Dad

- for inspiring me to get ’er done.

- To my students

- for being the reason for doing this.

(

Groucho Marx once quipped, “Outside of a dog, a book is a man’s best friend. Inside of a dog, it’s too dark to read.” This book is not about that kind of word play. (These are not the droids you’re looking for. Move on.) But it will have something to say about Outside versus Inside in a logical context later on.

Kith and kin to word play is the idea of transformation, new from old. Old is known, new unknown. The unknown is daunting unless and until it becomes known. And the becoming process is called learning, a transformation from an old state of (less) knowledge to a new state of (more) knowledge.

Topics To Be Explored

The field of knowledge called discrete mathematics is HUGE — and no wonder; it is like a catch-all net. Anything not contemplated by mathematics of the continuous variety (calculus and company) is considered discrete (but not discreet). So narrowing the scope is a challenge of near epic proportions — met by allowing the following topic pairs to serve as guideposts as we venture out into this vast field.

- Sets and Logic

- Functions and Relations

- Combinatorics and Probability

- Number Theory and Practice

- Trees and Graphs

- Languages and Grammars

Functions (which build on sets (which build on logic)) embody transformations. These are natural, foundational topics in a study of discrete mathematics. And to provide a transformative learning experience, functional programming (the oft-neglected (by most students) odd duck of the programming world) will be a particular focus of this work.

There are two main reasons to gain experience with the functional paradigm. One, functional programming rounds out the dyad of procedural and object-oriented programming, presumably already in the typical programmer’s toolbox. Two, learning functional programming is a natural way to learn discrete mathematics, especially since functions are a key aspect, and illuminate many other aspects of discrete mathematics.

For reasons revealed à la Isaiah 28:10, the language of choice for learning functional programming will be the lisp programming language, specifically the Emacs lisp (elisp) dialect. Why lisp, certainly, but especially why elisp? To forestall unbearable suspense, let’s dispense with the knee-jerk objection that elisp is not a pure functional programming language. With discipline it can be used as such, and the effort will be instructive.

| Procedural | Object-Oriented | Functional |

|---|---|---|

| Variable values vary. | Same as Procedural. | Variable values never change once assigned. |

| Collections are mutable. | Same as Procedural. | Collections are immutable. |

| Code is stateful (function calls can have side effects). | Same as Procedural. | Code is stateless (function calls can be replaced by their return values without changing program behavior). |

| Functions are partial (not defined on all inputs, so exceptions can be thrown). | Same as Procedural. | Functions are total (defined on all inputs, so exceptions are not thrown, and need not be handled.) |

The above table fails to capture all the distinctions in this three-way comparison, but one thing is obvious. When looked at through the functional lens, procedural and object-oriented are the same. What then distinguishes them? The main difference is in the types of the variables.

Object-oriented adds user-defined types to the standard primitives and composites of procedural.

Procedural and object-oriented also differ on how they treat nouns and verbs. It gets pretty topsy-turvy when normal everyday distinctions between nouns (things) and verbs (actions) are blurred.

When nouns get verbed and verbs get nouned, it’s double word play!

DIZ

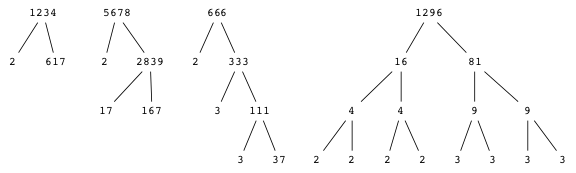

Speaking of double, combinatorics, the study of combining, or counting, and number theory, the study of integers (useful for counting — especially the positive ones) are an integral ☺ part of discrete math. How many ways are there of combining things in various combinations? Variations, arrangements, shufflings — these are things that must be accounted for in the kind of careful counting that is foundational for discrete probability theory. But they also come into play in modern practices of number theory like cryptology, the study of secret messages.

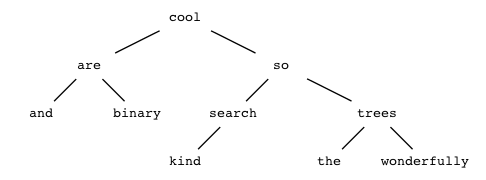

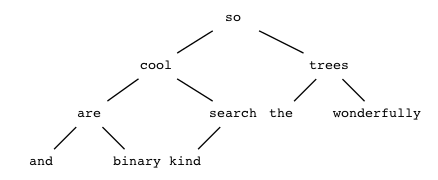

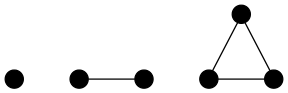

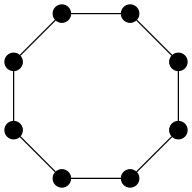

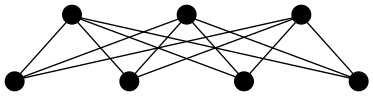

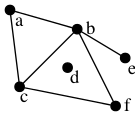

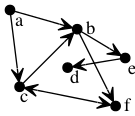

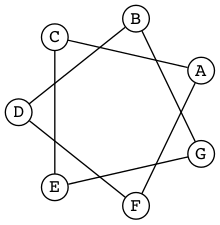

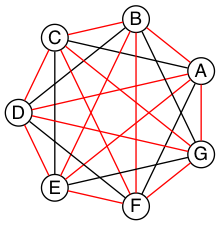

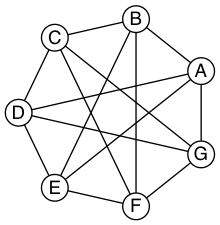

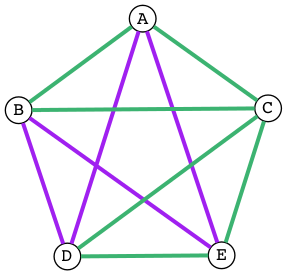

Double encore: two more discrete structures, with a cornucopia of applications, are trees and their generalization, graphs. Trees and graphs are two of the most versatile and useful data structures ever invented, and will be explored in that light.

Teaser begin, a binary tree — perhaps the type easiest to understand — can represent any conceptual (as opposed to botanical) tree whatsoever. How to do so takes us into deep representational waters, but we’ll proceed carefully. And because graphs can represent relations, listed above in conjunction with functions, they truly are rock solid stars of math and computer science. The relationship between functions and relations is like that of trees and graphs, and when understood leads to starry-eyed wonder, end teaser.

DCP

CDF

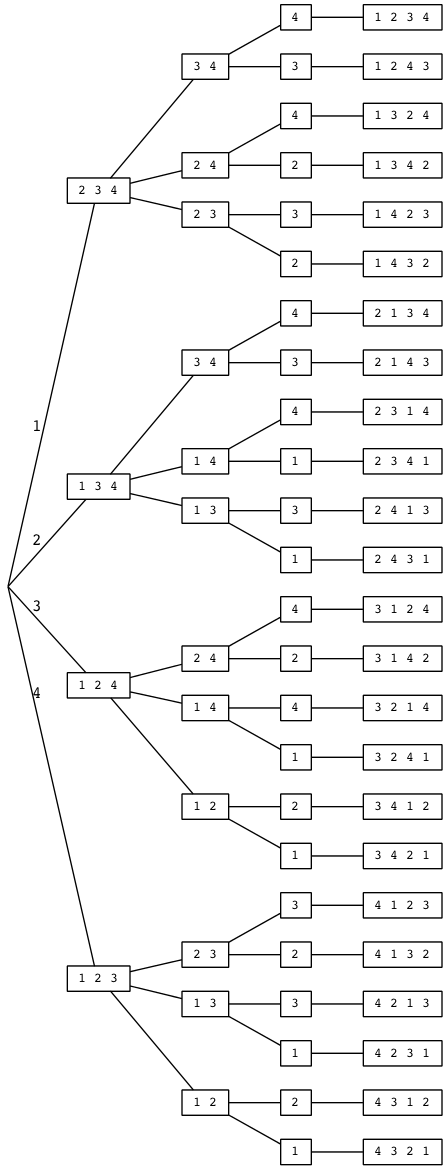

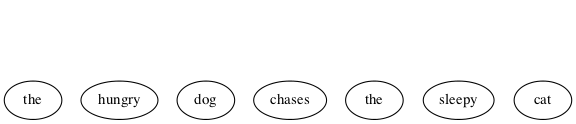

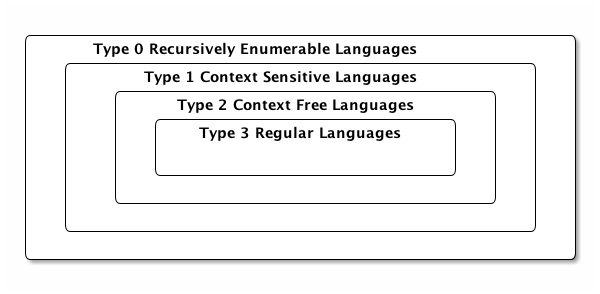

Last double: finishing off and rounding out this relatively small set of topics is the dynamic duo, languages and grammars. Language is being used, rather prosaically, as a vehicle for communication, even as I write these words, and you read them. There is fascinating scientific evidence for the facility of language being innate, inborn, a human instinct. Steven Pinker, who literally wrote the book on this, calls language a “discrete combinatorial system”, which is why it makes total sense that it be in this book and beyond.

And what is grammar? Pinker in his book is quick to point out what it is not. Nags such as “Never end a sentence with a preposition,” “Don’t dangle participles,” and (my personal favorite) “To ever split an infinitive — anathema!” Such as these are what you may have learned in ‘grammar school’ as teachers tried their best to drill into you how to use good English. No doubt my English teachers would cringe at reading the last phrase of the last sentence of the last paragraph, where “it” is used in two different ways, and “this book” can ambiguously refer to more than one referent — think about it.

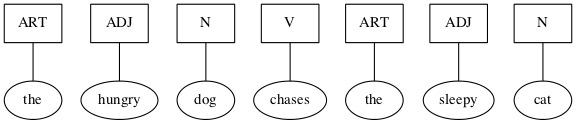

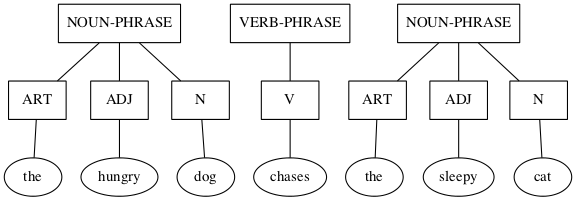

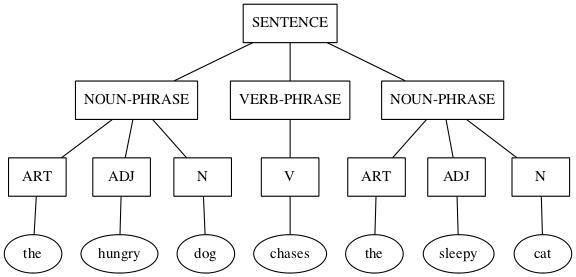

No, in the context of discrete mathematics, grammar has nothing to do with proper English usage. Instead, a grammar is a generator of the discrete combinations of a language system. These generated combinations are called phrase structures (as opposed to word structures), hence, in this sense grammars are better known as phrase structure grammars. But we’re getting ahead of ourselves — PSG ISA TLA TBE l8r.

Autobiographical Aside

I am writing this book for many reasons, which mostly relate to the following language-ish math-ish relationships and transformations: Words have Letters, Numbers have Digits, Letters map to Digits, hence Words map to Numbers.

I love words. I love numbers. Does that mean English was my first choice of discipline, Math second? No! English is my native tongue, but Math to me feels pre-native, or the language I came into this world furnished with. Not that I lay claim to being a mathematical prodigy like Karl Friedrich Gauss, John Von Neumann, or any extraordinary human like that. Not even close!

A first recollection of my inchoate interest in math is from third grade. There I was, standing at the blackboard, trying to figure out multiplication. The process was mysterious to me, even with my teacher right there patiently explaining it. But her patience paid off, and when I “got it” the thrill was electrifying! Armed with a memorized times table, I could wield the power of this newly acquired understanding. Much later in life came the realization that I could immensely enjoy helping others acquire understanding and thus wield power!

Back to words. As I said, I love them. I love the way words work. I love the way they look. I love the way they sound. Part of this stems from my love of music, especially vocal music. Singers pay attention to nuances of pronunciation, diphthongs being but one example. Take another example. The rule for pronouncing the e in the. Is it ee or uh? It depends not on the look of the next word but its sound. The honorable wants ee not uh because the aitch is silent. The Eternal, but the One — ee, uh. Because one is actually won.

The attentive reader may have noticed that, among other infelicities, the preceding paragraph plays fast and loose with use versus mention. There is a distinction to be made between the use of a word, and the mention of a word.

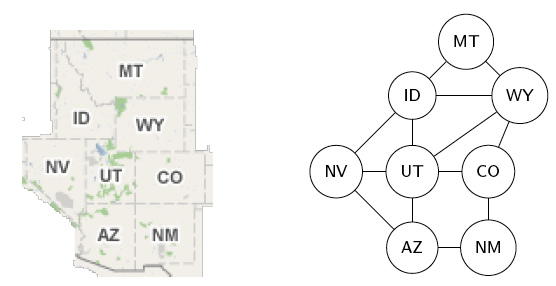

For example, the word Idaho:

Idaho has mountains. (A use of the word.)

‘Idaho’ has five letters. (A mention of the word.)

This distinction will come to the fore when expressions in a programming language like lisp must be precisely formed and evaluated. The quote marks are all-important. Small marks, big effect.

Analogously, what importance does a simple preposition have? How about the crucial difference between being saved in your sins versus being saved from your sins! Small words, enormous effect.

CRJ

I have a well-nigh fanatical fondness for the English alphabet with its 26 letters. 26 is 2 times 13. 2 and 13 are both prime, which is cool enough, but they’re also my birth month and day. And it’s unambiguous which is which. 2/13 or 13/2 — take your pick. Because there’s no thirteenth month, either way works. Not every birth date is so lucky! Not every language’s alphabet is so easy, either.

Despite the coolness of twice a baker’s dozen, it is deficient by one of being an even cooler multiple of three, and that’s a state of affairs we’ll not long tolerate. But which non-alphabetic (and non-numeric) symbol is best pressed into service to bring the number to three cubed?

Twenty-seven is three times nine. Nine give or take three is the number of main topics. Three chapters (not counting opening and closing bookends), with each chapter having at least three sections, each section having at least three subsections — perhaps bottoming out at this level — this seems to me to be a pretty good organization.

Slice it. Dice it. No matter how you whatever ice it — notice it’s not ice (but is it water — whatever hve u dehydrated?!) — ‘alphabet’ is one letter shy of being a concatenation (smooshing together) of the first two letters of some other (which?) alphabet. So correct its shyness (and yours). Say alphabeta. Go ahead, say it! Now say ALP HAB ETA (each is pronounceable) — but don’t expect anyone to understand what you just said!

TOP is the TLA

The Organizing Principle I have chosen for this book is the Three-Letter Acronym.

Why? Well, it fully fascinates me that “TLA ISA” is a perfectly grammatical sentence if interpreted just so. It expresses concisely the truth: ‘TLA’ “self-describes” (which is another intriguing saying).

But TLA can also stand for Three-Letter Abbreviation, of which there are many,

(JAN FEB MAR APR MAY JUN JUL AUG SEP OCT NOV DEC) being but one example.

Replacing the ‘A’ in TLA with a ‘W’ gives TLW, an acronym for Three-Letter

Word — (TOP TOE TON TOT TOY) for a butcher’s half-dozen of which.

Note that we could generate this list by simply prefixing TO to each letter

in PENTY, a carefully chosen way to code-say Christmas.

Note too that neither this list of five TLWs nor the first list of twelve TLAs has commas, those typical list item separators. The comma-free rendering is deliberate and significant, as will be seen.

Back to playing with words. If you can eat your words, shouldn’t you be able to smell and taste them too?! Or play with words like you play with your food? (These are rhetorical questions — don’t try to answer them!)

Scrambled EGGS — (GSGE GESG SGEG ...)

Can all 12 (not 24! (which is not to say 24 factorial (which equals 620448401733239439360000, IYI))) arrangements be put into one tetragramamalgam? What’s a tetragramamalgam? (These are not rhetorical questions — do try to answer them!)

So there you have it. An assorted set of words and “words” to guide discovery. Many to unpack meaning from. If the metaphor is a good one, that meaning gets packed into words, (or encodings thereof (another layer of packing material)), then unpacking can result in rich interconnected structures that strengthen learning, that make it stick in the brain. And that that permanently (let it be so!) stuck learning will serve you well is my high hope.

ONE

He awoke with a start. What time was it? … Did his alarm not go off? … No, it was 3:45, he just woke up too early. He lay there, mind racing, trying to figure out what had woken him up. His wife was breathing deeply, she was sound asleep, so it wasn’t her stirring. No … it was that dream. That awesome, awefull dream where he was outside staring into the clear night sky, basking in “the icy air of night” when suddenly he felt a very real vertigo, as if he were falling up into that endless star-sprinkled void.

Falling up. A contradiction in terms. Yes, but one to be embraced, not shunned, because it gives one an EDGE. No, this is the title of a book. Yes, that treasured book he had acquired years ago, and still enjoyed. Clever poems and cute drawings. It was because the author was so playful with words and the thoughts they triggered that he liked it, and his other book, what was it? Where The Sidewalk Ends. Yes, well, enough of that. He would have to get up soon. Today he would meet his two new tutees. TWOtees for short. A male and a female had seen his ad and responded. Was he expecting more than two to? Yes … but two would be fine. Two would be enough to keep him busy.

ONF

“What I’d like to do today is just make introductions and see where we’re at,” he said. “My name is Tiberius Ishmael Luxor, but you can call me Til. T-I-L, my initials, my preference.” While escorting his two visitors into his study, his mind wandered, as it always did when he introduced himself. Tiberius, why had his parents, fervent fans of Captain Kirk and all things Star Trek, named him that? And Ishmael? Was Moby Dick also a source of inspiration? Or the Bible? He had never asked them, to his regret. Luxor, of course, was not a name they had chosen. He rather liked his family name. The first three letters, anyway. Latin for light. He often played with Luxor by asking himself, Light or what? Darkness? Heavy? Going with the other meaning of light seemed frivolous.

“Uh, my name’s Atticus. Atticus Bernhold Ushnol,” his male visitor said, noticing that Til looked distracted. “What do you prefer we call you?” asked Til, jerking himself back to full attention. “Well, I like Abu, if that’s okay with you!”

Til smiled. A man after his own heart. “How acronymenamored of you!” he said.

“Is that even a word?” asked his female guest, addressing Til. Feeling just a little annoyed, she turned to Abu and said “You really want us to call you the name of the monkey in Aladdin?” Abu smiled, a crooked grin. “Well, I wouldn’t know about that — I never saw Aladdin.”

“How could you not have seen Aladdin? What planet are you from?” she said, completely incredulous.

“Hold on a minute,” said Til. “Let’s discuss the finer points of names and origins after you introduce yourself.”

“Ila. My middle name is my maiden name, Bowen. My last name is my married name, Toopik, which I suppose I’m stuck with.” Ila always felt irritated that marriage traditionally involved changing the woman’s name, not the man’s. “And yes, it’s Eee-la, not Eye-la. And I especially prefer you don’t call me Ibt, if you get my drift!”

Til laughed. Abu was still grinning but said nothing, so Til went on. “We need variety, so Ila is fine. Not everyone needs to be acronymized!”

ONG

Later that day as Til reflected on their first meeting, he saw himself really enjoying the teacher-learner interactions to come. His ad had had the intended effect. Abu and Ila were motivated learners, albeit for different reasons. He was somewhat surprised that not more interest had been generated. But he knew it was unlikely up front. So two it would be. Two is a good number. Three is even better (odd better?) if he counted himself as a learner. Which he always did. Learning and teaching were intricately intertwined in his mind. Teaching was like breathing, and both teaching and learning were exhilarating to Til.

His wife interrupted his reverie. “Til, dear, what did you learn today from your first meeting with — what did you call them — Abu and Ila?”

“Interesting things,” said Til. “Abu is the general manager at a landscaping company (I think he said nursery as well). Ila works for some web design company (I forget the name) doing programming. She wants to learn discrete math because she’s been told it will make her a better programmer!”

“However, Abu’s not a programmer, and not really all that techy, I gathered, but says he loves learning, practical or not. I predict this may put him at odds at times with Ila, who said she only wants practical learning. I told her Euclid would mock that attitude, and she was surprised. But she agreed to look into that. She didn’t know who Euclid was, if you can imagine!” Til winked his eye, and his wife rolled hers.

ONH

“Anyway, Abu’s pretty laid back, so her barbs won’t bother him — much I hope,” he said.

“Are they married, any kids?” she said.

“Abu is single, but would like to be married.” Til rubbed his chin. “Ila is married, but she didn’t mention her husband’s name, or really anything about him, except that he’s not a nerd like her. Before they even warmed up to my affinity for acronyms, Ila confessed to being a DINK, and Abu immediately responded, ‘I guess that makes me a SINKNEM!’ So he’s pretty quick on the uptake.”

“That’s good!” she said. “So Ila’s husband is not nerdy like her, but …?”

“But she also didn’t say what he does,” he said. “She taught herself programming, how cool is that?!”

“Very cool, Mr. Luxor,” she said affectionately, gently rubbing his shoulders.

“Mrs. Luxor, I’ll give you 20 minutes to stop that!” he said.

“What are their interests other than discrete math?” she said, ignoring his pathetic ploy.

“Well, let’s see, Abu rattled off his other interests as flowers, stars and poetry!” he said. “And Ila likes sports and the outdoors. Hopefully that includes stars!”

“That would be great if you all have stars in common,” she said.

ONI

Til nodded, but his eyes unfocused as his thoughts started to wander. His wife withdrew, wordlessly worrying that Til was thinking about sharing his dream about falling up into the stars with Abu and Ila. That never seemed to go well. But Til was remembering the exchange he had with Abu, who lingered a few minutes after Ila left.

He had said, “Abu, since you like flowers, you must be okay with the STEMless reputation they have!” Without waiting for him to answer he went on, “Since I gave Ila an invitation to learn something about Euclid, I invite you to look up what Richard Feynman said about flowers and science, specifically whether knowing the science made him appreciate the flowers more or less?”

Well, enough musing for now. His two tutees were eager and grateful, and not just because they thought the fee he had set was reasonable. Little did they know the price of knowledge he would exact of them.

CRC

This is the first of many exercises/problems/puzzles to come, always displayed

in this type of yellow-background box.

This is the first of many exercises/problems/puzzles to come, always displayed

in this type of yellow-background box.

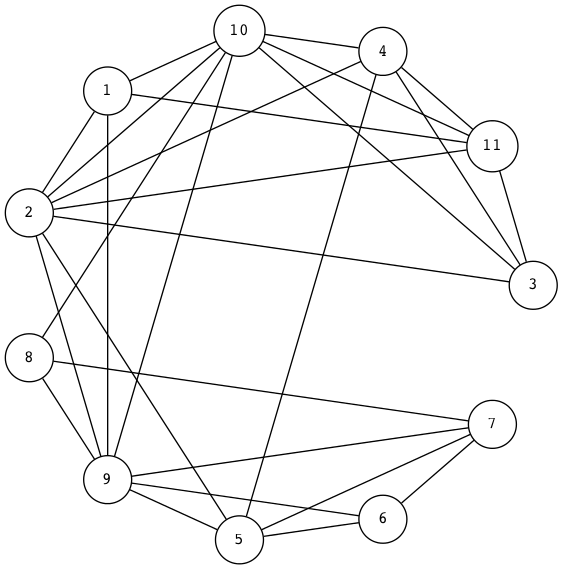

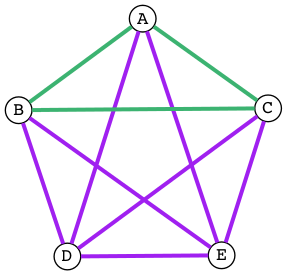

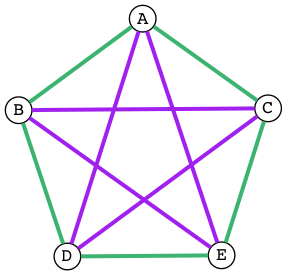

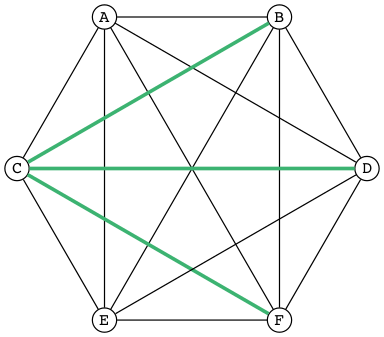

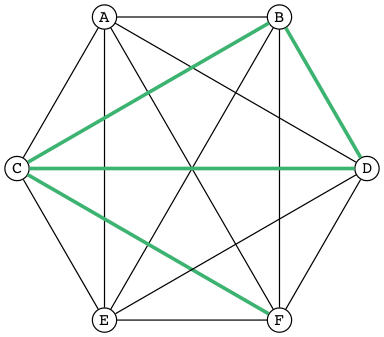

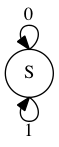

Propose a connection between discrete mathematics and the image above. (This is a “free association” exercise — there is no right answer.)

DGZ

The operation called dehydration takes a word and deletes all letters in it from H to O. What is the original (rehydrated) text of this dehydrated sequence of words?

TE TE AS CE TE WARUS SAD T TA F AY TGS F SES AD SPS AD SEAG WAX F CABBAGES AD GS AD WY TE SEA S BG T AD WETER PGS AVE WGS

CHJ

Let \(n / 0\) be infinity, for any positive integer n.

What English word has the largest finite consonant-vowel-ratio (CVR)? For example, the CVRs of the words (including the TLA) in the previous sentence are 3:1, 5:2, 3:1, 2:1, 2:1, 5:2, 1:1, 2:1, 3:2, 2:3, and infinity (3:0).

DOK

Pick a number (a positive integer). Form the product of that number, one more than that number, and one more than twice that number. Repeat for several different numbers. There is a pattern to these products. What is it?

CJF

Numbers and words. Cycles and patterns.

Do you think mathematicians discover or invent patterns? While pondering that question, consider what G. H. Hardy famously wrote: A mathematician, like a painter or a poet, is a maker of patterns. If his patterns are more permanent than theirs, it is because they are made with ideas. What do you think he meant by that?

Here’s a puzzle superficially just involving a 6-digit number and a 6-letter “word”. What does

654321

have to do with

CLAHCK

other than they both follow the “length 6” pattern?

DZB

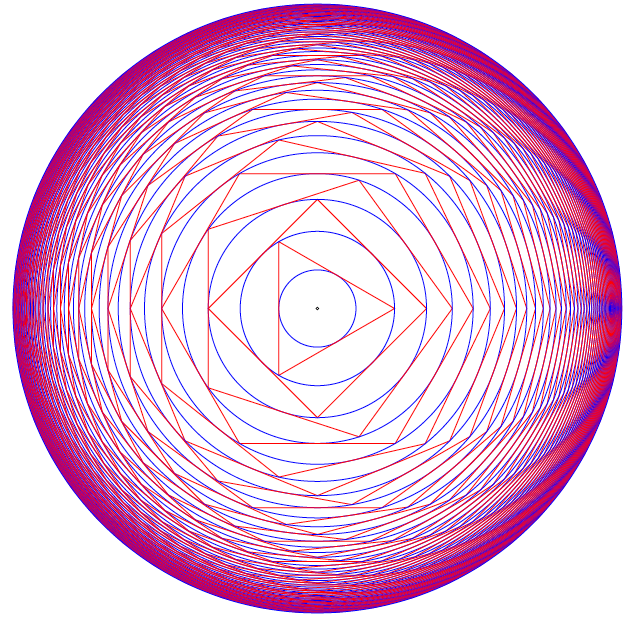

In the figure below, the innermost circle has radius 1. It is circumscribed by an equilateral triangle, which is circumscribed by a circle, which is circumscribed by a square, which is circumscribed by yet another circle, and so forth.

What is the radius of the outermost circle?

ABC

Always Begin Carefully with Sets and Logic.

When you read you begin with A, B, C; when you sing you begin with Do, Re, Mi; when you count you begin with 1, 2, 3 — or is it 0, 1, 2?! Mathematicians do both, and have logical reasons for each. Computer scientists mainly do zero-based counting, as coaxed to do by both hardware and software. 0-1-2; ready-set-goo!

So let’s begin with the set of positive integers, also known as the counting numbers — except, wait. How do you count the number of elements in the set with no elements, AKA the empty set? You need zero, which for centuries was not even considered a number. Lucky for us, these days zero is a full-fledged member of the set of natural numbers, another name for the counting numbers. But some still see zero as UNnatural and thus exclude it from the set. Right away, ambiguity rears its hoary head, and definitions must needs be clarified before crafting arguments that depend on whether zero is in or out.

A set is just a collection of objects, where objects are individual, discrete, separate things that can be named or identified somehow.

An object belonging to a set is called a member or an element of the set. In symbols, for a set S and object x:

x ∈ S

is the statement “ x is an element (or member) of S ” — or shorter, “ x is in S ”. For non-member y, the statement “ y is not in S ” is symbolized as

y ∉ S.

ABD

A collection that serves the mathematical idea of a set is an unordered one — the elements can be listed in any order and it does not change the set.

Speaking of lists, LISP originally stood for LISt Processor (or Processing), so let’s list some simple sets in lisp style, and show a side-by-side comparison with math style:

| Lisp Style | Math Style |

|---|---|

| () or nil | { } or ∅ |

| (A B C) | {A, B, C} |

| (Do Re Mi) | {Do, Re, Mi} |

| (1 2 3) | {1, 2, 3} |

| (0 1 2) | {0, 1, 2, …} |

Other than dispensing with commas and replacing curly braces with parentheses, lisp is just like math — except, wait. What are those three dots saying? In math, the ellipsis (…) is short for “and so on” which means “continue this way forever”. Since lisp is a computer programming language, where doing things forever is a bad idea, we truncated the set named \(\mathbb{N}\) after listing its first three members.

ABE

Also, no duplicates are allowed in sets. This requirement guarantees element uniqueness, a desirable attribute strangely yoked with element unorderliness, the other main (anti-)constraint. Here are three lispy sets (really lists):

(A B C) (A A B C) (A A A B C C)

Here are three more:

(B A C) (C A B) (B C A)

But by definition these are all the same set — (A B C) — so let’s just

make it a rule to quash duplicates when listing sets. And since order is

implied by the normal left-to-right listing order, we have a contradictory

situation where order is implied yet irrelevant. That really implies (linked)

lists are not the ideal data structure for representing sets, and (linkless)

vectors in elisp are not much better — they are simply indexable arrays of

(heterogeneous or homogeneous) elements — but let’s just say — stipulate

— that our rule also prefers vectors to lists. So the rule transforms a

mathy set like {A, C, C, C, B} into a lisp list (A C B) and thence to the

vector [A C B], which is now in a form that can be evaluated by the lisp

interpreter built into emacs.

An expression expresses some value in some syntactic form, and to evaluate means to reduce an expression to its value.

Evaluating an expression is typically done in order to hand it off to some further processing step.

OQP

The way to deal with lisp expressions is to look for lists, and when found,

evaluate them. Finding them is easy — lisp code consists of lists, and lists

of lists nested to many levels. Evaluating a list entails evaluating each of

its elements, except the first, which is treated differently. For now, think

of the first element as an operator that operates on the values of the rest of

the elements. These values are called the operands. So (+ 1 2 3 4) adds

together operands 1, 2, 3, and 4 to produce 10. Note the economy of this

prefix notation, as it’s called: only one mention of the + sign is needed.

The more familiar infix notation requires redundant + signs, as in

1+2+3+4.

Now is the time to overcome inertia and gain some momentum with elisp. Find at this link a mini-primer and work through it in emacs.

USV

Or pyrire jvgu ahzoref. Svaq n jnl gb vafreg vagb gurfr gra yvarf gur sbhe fgnaqneq zngu bcrengbef (+, -, \gvzrf, \qvi), be ! (snpgbevny), be \enqvp (fdhner ebbg), naq cneragurfrf sbe tebhcvat, gb znxr gra qvssrerag rkcerffvbaf gung rnpu rinyhngr gb 6. Sbe rknzcyr: \enqvp4 + \enqvp4 + \enqvp4 = 6.

| 0 | 0 | 0 | = | 6 | |||

| 1 | 1 | 1 | = | 6 | |||

| 2 | 2 | 2 | = | 6 | |||

| 3 | 3 | 3 | = | 6 | |||

| 4 | 4 | 4 | = | 6 | |||

| 5 | 5 | 5 | = | 6 | |||

| 6 | 6 | 6 | = | 6 | |||

| 7 | 7 | 7 | = | 6 | |||

| 8 | 8 | 8 | = | 6 | |||

| 9 | 9 | 9 | = | 6 | |||

ABF

We next turn our attention to a way to view statements about sets as

propositions with Boolean values (true or false). Recall that in lisp, true

is abbreviated t, and false nil. If you like, with some help from a pair

of global variables, you can be traditional and just use true and false.

A proposition is a sentence (or statement) that is either true or false. The sentence can be about anything at all (not just sets), as long as ascertaining its truth or falsity is feasible. The study called propositional logic deals with standalone and compound propositions, which are propositions composed of other propositions, standalone or themselves composite.

How to compose or combine propositions to create these compounds is coming after a few exercises in model building, starting with simple propositions.

A proposition: Man is mortal.

Another proposition: The sky is up.

A proposition about sets: The set of letters in the English Alphabet can be split into the set of vowels and the set of consonants.

A proposition about numbers: The square of a number is more than double that number.

One of the above propositions is false. Which one?

A non-proposition: Go to the back of the boat.

Another non-proposition: Why bother?

Building mental models requires raw materials — thought stuff — and the knowhow to arrange this stuff usefully. Both come more easily and more plentifully as you do exercises and work through problems.

UCA

Start your exercise warmup by thinking up three examples and three non-examples of propositions. Make them pithy.

ODS

Which of the following sentences are propositions? For each proposition, give its truth value (true or false).

- 2 + 2 = 4.

- 2 + 1 = 4.

- Toronto is the capital of Germany.

- Read these questions carefully.

- x + y + z = q.

- What time is it?

- 2x + 3 = 9.

- Simon says jump.

UGX

Put the proposition “ v is a member of the set of English alphabet consonants” in symbolic logic terms, using C as the name of the set of consonants.

OGR

Which of the following are simple (not compound) propositions?

- Weeks are shorter than months.

- Days are longer than hours and minutes.

- Hours are longer than minutes and seconds are shorter than minutes.

- People can fly or pigs can sing.

ABG

True propositions are AKA facts. A set of true propositions is called a knowledge base, which is just a database of facts. This set represents what we know about the world (or a subset of the world) or, in other words, the meaning or interpretation we attach to symbols (such as sentences — strings of words). Take a simple world:

- It is true that snow is white.

- It is false that grass is white.

Let’s assign the propositional variables p and q to these propositions

(made pithier) to make it easier to manipulate them:

p= “snow is white”q= “grass is white”

p and not q represents our interpretation of this world. We could imagine a

stranger world — stranger than the real one where we live — where p and

q (i.e., where snow is white and so is grass) or even not p and q (where

snow is green, say, and grass is white) is the real model.

A model is (roughly speaking) the meanings (interpretations) of symbols. In general, a model is all over the map, but in propositional logic, models are assignments of truth values to propositions (conveniently identified as variables).

As aforementioned, truth values are Boolean values, true or false. Period. No partly true or somewhat false, nor any other kind of wishy-washiness.

Decision-making ability is an important component of any programming language. Lisp is no exception. The conditional logic decidering of the basic logical connectives will be revealed by the following functions that you are encouraged to experiment with. The first three are built-in:

and |

or |

not |

xor |

|---|---|---|---|

| ∧ | ∨ | ¬ | ⊕ |

Naturally, not is not a connective in the sense of connecting two

propositions together, but it functions as a logical operator nonetheless. In

everyday life, “not” expresses negation, flipping truth values. When notted

(AKA negated) true becomes false, false becomes true.

For example, the negation of the proposition “Birds are singing” is “Birds are not singing” — or to put it more verbosely, “It is not the case that birds are singing” — which rendition unburdens us of the need to figure out where to insert “not” in the original proposition. We merely prefix any proposition with “It is not the case that” and be done with it. In symbols, prefix any proposition p with ¬ to get ¬ p and flip its truth sense.

For xor, the ‘exclusive’ or, we use the if form to do a

conditional expression with some clever not-using logic:

(defun xor (p q) (if p (not q) q))

We see that this works correctly with all four cases:

(setq true t false nil) (list (xor false false) (xor false true) (xor true false) (xor true true))

(nil t t nil)

UWM

Express in English the negation of each of these propositions:

- Two plus two equals four.

- Two plus one is less than four.

- Toronto is the capital of Germany.

- A total eclipse happens infrequently.

- Special measures must be taken to deal with the current situation.

OPZ

Let p and q be the propositions:

p: I studied.

q: I got an F on the test.

Express each of the propositions below as an English sentence:

- ¬ p

- p ∨ q

- p ∧ q

- ¬ p ∧ ¬ q

- ¬ p ∨ q

- ¬ (p ∨ q)

UOX

What other special forms besides if does elisp have for doing conditionals?

Why are they called “special”?

OTX

Determine whether these sentences use an inclusive or, or an exclusive or:

- A side of fries or chips comes with your sandwich.

- A high school diploma or a GED are needed for this position.

- To get your license, you must provide your social security card or birth certificate.

- We accept Mastercard or Visa credit cards.

- You can cash in your tickets or exchange them for a prize.

- Take it or leave it.

ABH

We can compose or combine propositions to create compound propositions by applying the following rules again and again (consider this a ‘grammar’ for generating these propositional ‘molecules’ from propositional ‘atoms’):

- A

propositionis a variable, that is, a single element from the set[p q r s t ...]; - alternatively, a

propositionis apropositionpreceded by anot; - alternatively, a

propositionis apropositionfollowed by aconnectivefollowed by aproposition. - A

connectiveis a single element from the set[and or xor].

Rules 2 and 3 have a recursive flavor, so said because they refer back to themselves; that is, they define a proposition in terms of itself, and also simpler versions of itself.

We actually need more syntactic structure to make sense of certain compounds. For example, building a proposition from scratch by starting with

proposition;

from thence applying rule 1 (choosing p) then rule 3 yields

p connective proposition;

from thence applying rule 4 (choosing and) yields;

p and proposition;

from thence applying rule 3 again yields

p and proposition connective proposition;

from thence applying 1 twice and 2 once yields

p and q connective not r;

from thence one more application of 4 (choosing or) yields the

not-further-expandable:

p and q or not r.

Which is ambiguous. Does it mean p and s where s is q or not r? Or

does it mean s or not r where s is p and q? In other words, which is the

right interpretation, 1 or 2?

p and (q or not r); associating from the right.(p and q) or not r; associating from the left.

The mathematically astute reader will recognize that the parentheses are the extra syntactic sugar we need to sprinkle on to disambiguate (choose 1 or 2 (but not both)).

The next question is, does it matter? Try it both ways with the truth-value

assignment of p to true, q to false, and r to true:

Replacing p, q, and r with true, false, and true, respectively, in

p and (q or not r)

yields

true and (false or not true).

Before simplifying this expression, a quick review of how these connectives work:

The simplest “connective” first: not flips truth values, true becomes false,

false becomes true. To be true, or requires at least one of the two

propositions appearing on its left-hand-side (LHS) and its right-hand-side

(RHS) to be true. If both of them are false, their disjunction (a fancier

name for or) is false. To be true, and requires both propositions (LHS and

RHS) to be true. If even one of them is false, their conjunction (a fancier

name for and) is false.

Back to the task: the above expression simplifies to

true and (false or false)

from thence to

true and false

from thence to

false.

Replacing propositional variables with their values in

(p and q) or not r

yields

(true and false) or not true

which simplifies to

(true and false) or false

from thence to

false or false

and finally

false.

So they’re the same. But wait! What if we try a different assignment of truth

values? With all of p, q and r false, here is the evolving evaluation

for 1 (p and (q or not r)):

false and (false or not false)false and (false or true)false and truefalse

And here it is for 2 ((p and q) or not r):

(false and false) or not falsefalse or not falsefalse or truetrue

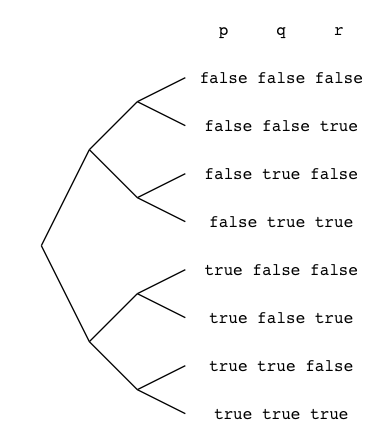

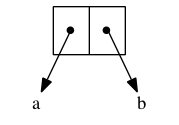

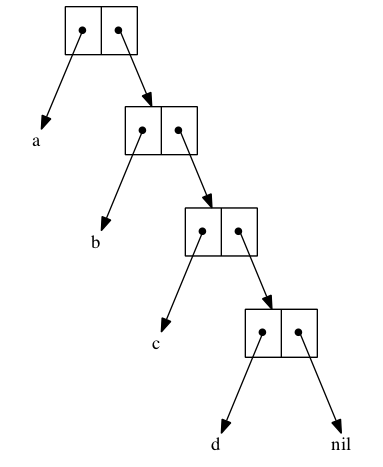

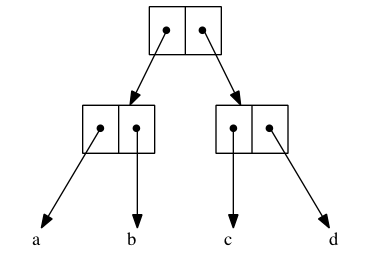

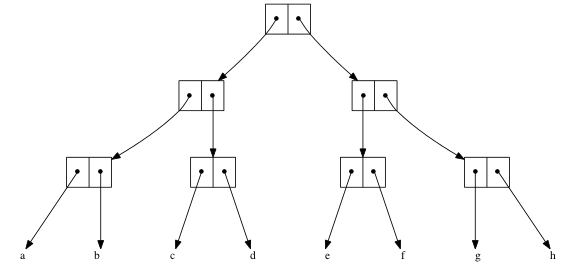

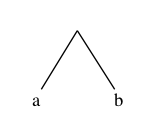

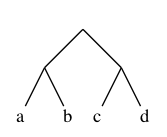

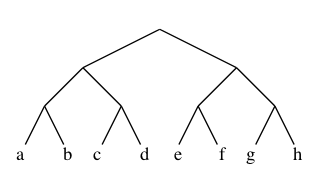

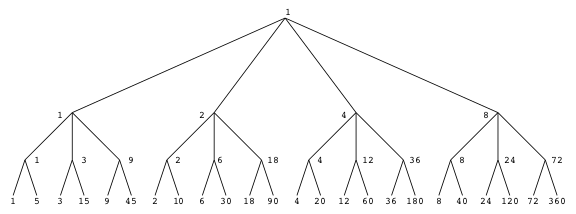

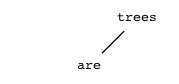

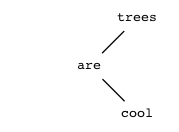

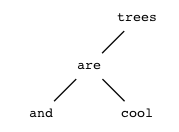

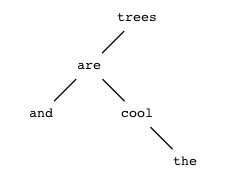

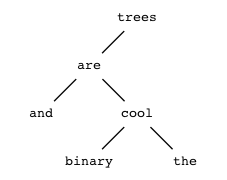

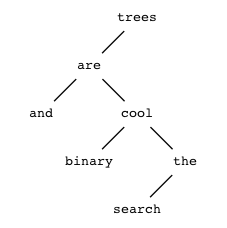

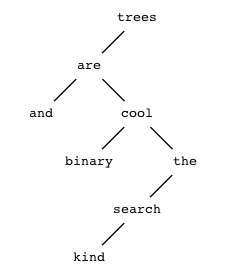

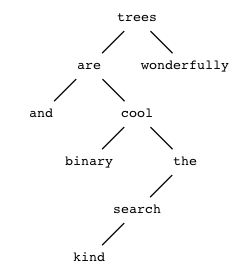

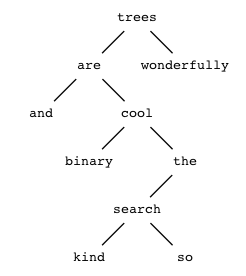

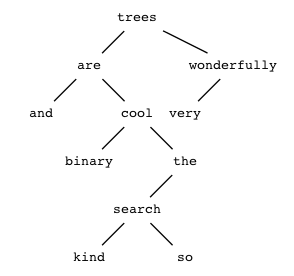

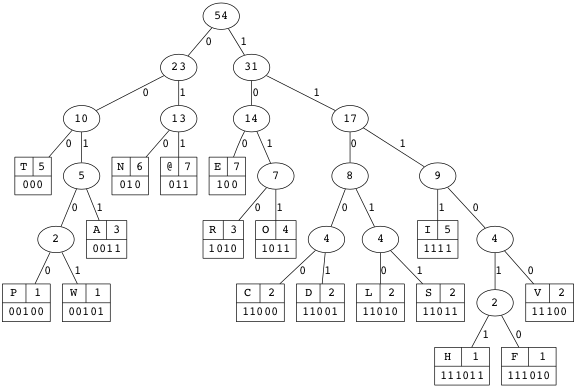

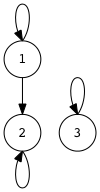

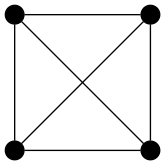

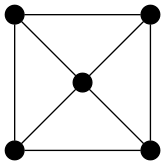

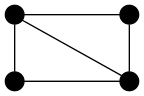

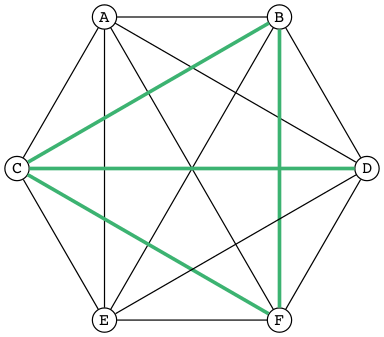

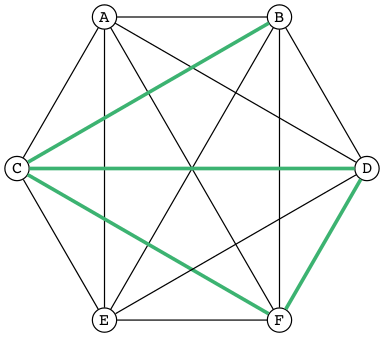

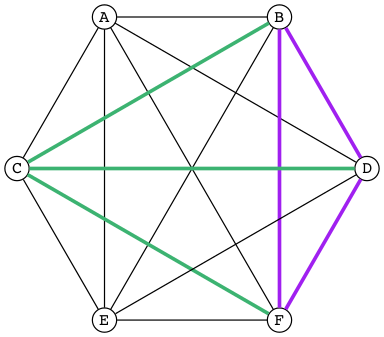

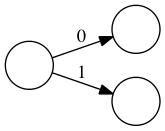

So it’s different — it does matter which form we choose. Let’s tabulate all possible truth-value assignments (AKA models) for each, and see how often they’re the same or not. First problem, how do we generate all possible assignments? Let’s use a decision tree:

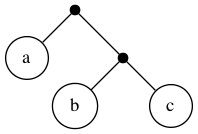

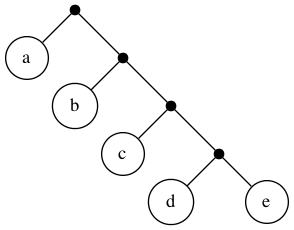

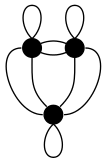

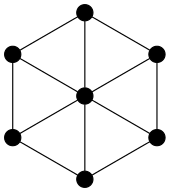

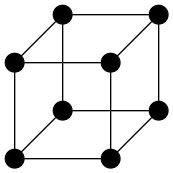

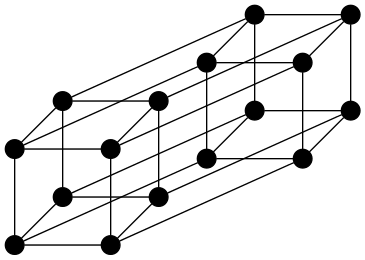

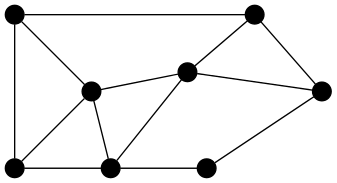

ABI

In this binary tree, each node (wherever it branches up or down) represents

a decision to be made. Branching up represents a choice of false, branching

down true. Going three levels (branch, twig, leaf) gives us the eight possibilities.

In this binary tree, each node (wherever it branches up or down) represents

a decision to be made. Branching up represents a choice of false, branching

down true. Going three levels (branch, twig, leaf) gives us the eight possibilities.

A truth table is a tabulation of possible models — essentially picking off the leaves of the tree from top to bottom (making each leaf a row).

Here’s an example:

| p | q | r | not r | (q or not r) | (p and (q or not r)) |

|---|---|---|---|---|---|

| false | false | false | true | true | false |

| false | false | true | false | false | false |

| false | true | false | true | true | false |

| false | true | true | false | true | false |

| true | false | false | true | true | true |

| true | false | true | false | false | false |

| true | true | false | true | true | true |

| true | true | true | false | true | true |

Numerically it makes sense to have, and indeed most other languages do have, 0

represent false. Lisp is an exception — 0 evaluates to non-nil (which is

not nil — not false). Having 1 represent true has advantages, too,

although MOL treat anything non-zero as true as well. Still, it’s less

typing, so we’ll use it to save space — but keep in mind, the

arithmetization of logic is also instructive because of how it enables

certain useful encodings. Here’s the same truth table in a more compact form:

| (p | ∧ | (q | ∨ | ¬ | r)) |

|---|---|---|---|---|---|

| 0 | 0 | 0 | 1 | 1 | 0 |

| 0 | 0 | 0 | 0 | 0 | 1 |

| 0 | 0 | 1 | 1 | 1 | 0 |

| 0 | 0 | 1 | 1 | 0 | 1 |

| 1 | 1 | 0 | 1 | 1 | 0 |

| 1 | 0 | 0 | 0 | 0 | 1 |

| 1 | 1 | 1 | 1 | 1 | 0 |

| 1 | 1 | 1 | 1 | 0 | 1 |

UOT

Return to the task of comparing the two expressions:

p and (q or not r)(p and q) or not r

Build a truth table of expression 2 and compare it with the truth table of expression 1 given just above.

ABJ

Let it be. Let it go. Let it go so. Let it be so. Make it so.

Let p be prime. In lisp, something like p is called a symbol, and you can

treat a symbol essentially like a variable, although it is much more than

that.

Let something be something is a very mathy way of speaking. So lisp allows

somethings to be other somethings by way of the keyword let.

Like God, who said let there be light, and there was light, the programmer

commands and is obeyed. The keyword let binds symbols to values, fairly

commanding them to stand in for values. Values evaluate to themselves. Symbols

evaluate to the values they are bound to. An unbound symbol cannot be

evaluated. If you try it, an error results.

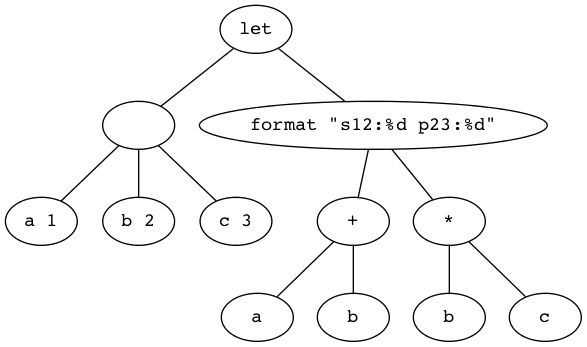

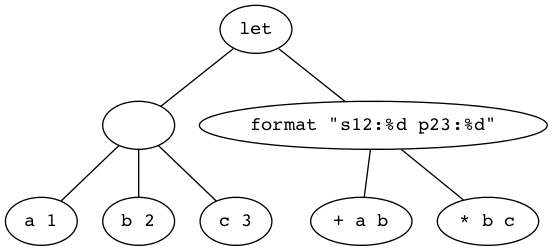

Before doing some exercises, let’s look at let syntactically and abstractly:

(let <frame> <body>)

The <frame> piece is a model in the form of a list of bindings. Just as we

modeled truth-value assignments as, e.g., p is true and q is false, in

list-of-bindings form this would be ((p true) (q false)). A binding is a

two-element list (<var> <val>). Variables can be left unbound, so for

example, (let ((a 1) (b 2) c) ...) is perfectly acceptable. It assumes c

will get a value later, in the <body>.

The <body> is a list of forms to be evaluated — forms that use the

bindings established in the frame model.

The frame list can be empty, as in:

(let () 1 2 3)

Alternatively:

(let nil 1 2 3)

The value of this symbolic expression is 3, the value of the last form before the closing parenthesis (remember, numbers self-evaluate).

ABK

Let’s combine the truth tables for the binary logical operators, ∧, ∨, and ⊕:

| \(\mathsf{p}\) | \(\mathsf{q}\) | \(\mathsf{p} \land \mathsf{q}\) | \(\mathsf{p} \lor \mathsf{q}\) | \(\mathsf{p} \oplus \mathsf{q}\) |

|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 |

| 0 | 1 | 0 | 1 | 1 |

| 1 | 0 | 0 | 1 | 1 |

| 1 | 1 | 1 | 1 | 0 |

Keep these in mind as we explore propositions involving set membership in various forms.

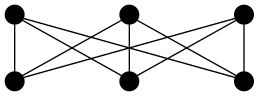

To forge the link between sets and logic, we will go visual and then formal. Drawing pictures helps us visualize the abstract, as set theorists and logicians discovered early on. The most common visual representation is the diagram named after its inventor.

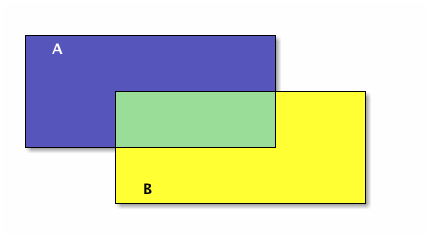

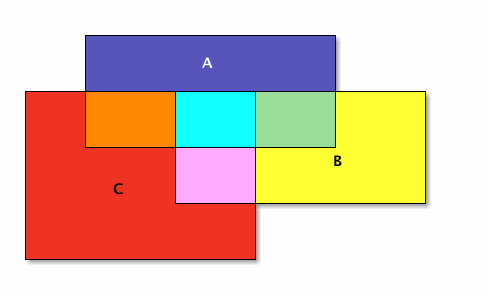

In Venn diagrams, sets are usually represented as circles or ellipses. If they overlap, the shape of the region of overlap is not a circle or ellipse, it is a lens-like shape (for a two-set diagram). Let’s be more consistent and make everything a rectangle. Here are two sets, A drawn as a blue rectangle, B, drawn as a yellow rectangle, and their intersection the green rectangle:

The union of A and B is everything blue:

Let’s do these operations in lisp also:

(setq A [1 2 3 4 5 6 7 8] B [5 6 7 8 9 10 11 12] A-intersect-B [5 6 7 8])

[5 6 7 8]

(setq A [1 2 3 4 5 6 7 8] B [5 6 7 8 9 10 11 12] A-union-B [1 2 3 4 5 6 7 8 9 10 11 12])

[1 2 3 4 5 6 7 8 9 10 11 12]

Now we make A and B smaller so they don’t overlap:

A and B have an empty intersection — no elements in common.

Two sets A and B are disjoint if A intersect B = ∅. More than two sets are pairwise (or mutually) disjoint if no two of them have a non-empty intersection. In other words, pick any two of many sets, the intersection of the pair of them is always empty.

(setq A [1 2 3] B [4 5 6] C [7 8 9] D [10 11 12] A-intersect-B [] A-intersect-C [] A-intersect-D [] B-intersect-C [] B-intersect-D [] C-intersect-D [])

[]

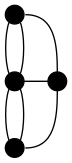

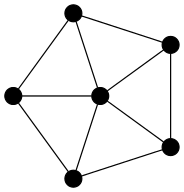

In the following figure A, B, and C are mutually disjoint:

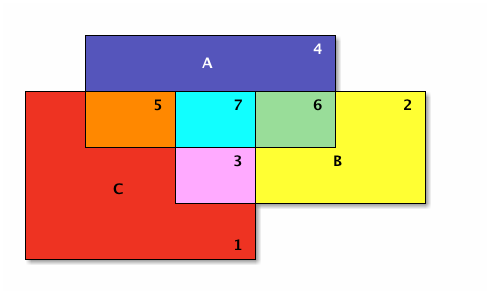

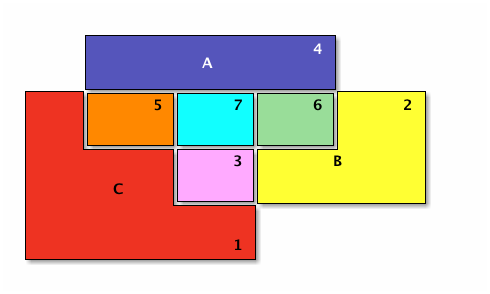

Now make them bigger so they overlap, and thus are no longer pairwise disjoint:

The seven different-colored regions have different set operations that define them, shown in a table after defining the different set operations.

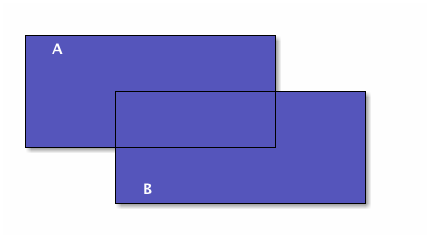

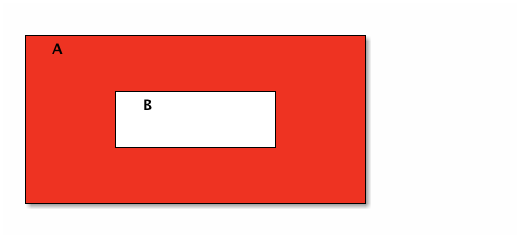

First let’s go back to two sets and make B entirely contained in A, in other words, every element of B is also an element of A (but not vice-versa):

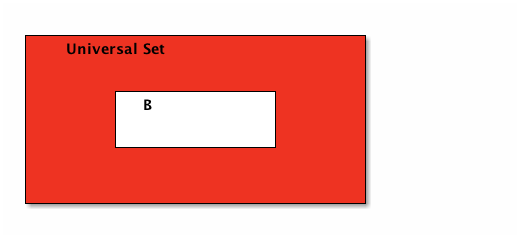

In all of these diagrams, we haven’t been showing but rather leaving implied the Universal set that is outside all of the drawn sets. To show set complement, however, it helps to have an explicit Universal set. Let’s promote set A to be the Universal set.

Set B’s complement is the red region; i.e., everything in the universe except what’s in B.

Now let’s formally define these set operations and see how they correspond to logic operations. If A and B are sets, then:

The union of A and B, denoted A \(\cup\) B, is the set with members from A or B or both.

The intersection of A and B, denoted A ∩ B, is the set with members in common with A and B.

The difference of A and B, denoted A \ B, is the set with members from A but not B.

The complement of B, denoted \(\overline{\mathsf{B}}\), is the set with members not in B.

Note that if an element is in A but not in B, or if it is in B but not in A, then it is not in the intersection of A and B.

The overlapping 3-sets colorfully introduced above is reproduced below with numeric identifiers added for easier cross-referencing in the table that follows:

| Number | Color | Set | A | B | C |

|---|---|---|---|---|---|

| 1 | Red | \(\overline{\mathsf{A}} \cap \overline {\mathsf{B}} \cap \mathsf{C}\) | 0 | 0 | 1 |

| 2 | Yellow | \(\overline{\mathsf{A}} \cap \mathsf{B} \cap \overline{\mathsf{C}}\) | 0 | 1 | 0 |

| 3 | Pink | \(\overline{\mathsf{A}} \cap \mathsf{B} \cap \mathsf{C}\) | 0 | 1 | 1 |

| 4 | Blue | \(\mathsf{A} \cap \overline{\mathsf{B}} \cap \overline{\mathsf{C}}\) | 1 | 0 | 0 |

| 5 | Orange | \(\mathsf{A} \cap \overline{\mathsf{B}} \cap \mathsf{C}\) | 1 | 0 | 1 |

| 6 | Green | \(\mathsf{A} \cap \mathsf{B} \cap \overline{\mathsf{C}}\) | 1 | 1 | 0 |

| 7 | Sky Blue | \(\mathsf{A} \cap \mathsf{B} \cap \mathsf{C}\) | 1 | 1 | 1 |

OIM

What is the correlation between the first three columns and the last three columns in this table?

UIN

What is the set difference between the set of letters in the English alphabet and the set of letters in the Hawaiian alphabet?

ABL

Now let p be the proposition ‘x ∈ A’ and let q be the proposition ‘x ∈ B’. Recall that ‘∈’ means “is in” and ‘∉’ means “is not in” and observe:

| Logical Statement | Equivalent Set Operation Statement |

|---|---|

| ¬ p | \(x \notin \mathsf{A}\) (or \(x \in \overline{\mathsf{A}}\)) |

| p ∨ q | \(x \in \mathsf{A} \cup \mathsf{B}\) |

| p ∧ q | \(x \in \mathsf{A} \cap \mathsf{B}\) |

| p ∧ ¬ q | \(x \in \mathsf{A} \setminus \mathsf{B}\) (or \(\mathsf{A} \cap \overline{\mathsf{B}}\)) |

| p ⊕ q | \(x \in (\mathsf{A} \cup \mathsf{B}) \setminus (\mathsf{A} \cap \mathsf{B})\) |

This last row, the xor logic operator, corresponds to what is called the

symmetric difference of A and B. With that, it is time to bring De

Morgan’s laws for logic and sets into the picture, first making explicit the

correspondence between logical and set operations:

| Logic | Symbol | Symbol | Set |

|---|---|---|---|

| conjunction | ∧ | \(\cap\) | intersection |

| disjunction | ∨ | \(\cup\) | union |

| negation | ¬ | \(\overline{\hspace{1em}}\) | complement |

De Morgan’s laws for logic state that the negation of a conjunction is the disjunction of negations; likewise, the negation of a disjunction is the conjunction of negations. De Morgan’s laws for sets state that the complement of an intersection is the union of complements; likewise, the complement of a union is the intersection of complements. In symbols:

| Logic | Equivalent Logic | Sets | Equivalent Sets |

|---|---|---|---|

| ¬ (p ∧ q) | ¬ p ∨ ¬ q | \(\overline{\mathsf{A} \cap \mathsf{B}}\) | \(\overline{\mathsf{A}} \cup \overline{\mathsf{B}}\) |

| ¬ (p ∨ q) | ¬ p ∧ ¬ q | \(\overline{\mathsf{A} \cup \mathsf{B}}\) | \(\overline{\mathsf{A}} \cap \overline{\mathsf{B}}\) |

| p ⊕ q | p ∨ q ∧ ¬ (p ∧ q) | \((\mathsf{A} \cup \mathsf{B}) \cap \overline{\mathsf{A} \cap \mathsf{B}}\) | \((\mathsf{A} \cup \mathsf{B}) \cap (\overline{\mathsf{A}} \cup \overline{\mathsf{B}})\) |

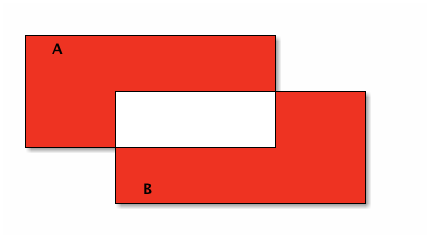

In pictures, the symmetric difference of A and B is everything red, and

this set operation corresponds directly to the ⊕ (xor) logic operation:

Note that the upper red region corresponds to A ∖ B, and the lower red region corresponds to B ∖ A. The union of these two differences is the symmetric difference, just as the exclusive or is one or the other, but not both.

Let’s redraw our colorful Venn diagram to emphasize the separation between each numbered (colored) region — separate in the sense that each is a non-overlapping subset.

With the separateness of the numbered regions made clear, we can reconstruct each set in terms of unions of those regions.

OJL

Justify where \(p = x \in A, q = x \in B, r = x \in C\):

| Set | As Union of | Logic ≡ Set Operation Description |

|---|---|---|

| A | 4 ∪ 5 ∪ 7 ∪ 6 | \((p \land \lnot q \land \lnot r) \lor (p \land \lnot q \land r) \lor (p \land q \land r) \lor (p \land q \land \lnot r)\) |

| B | 2 ∪ 3 ∪ 7 ∪ 6 | \((\lnot p \land q \land \lnot r) \lor (\lnot p \land q \land r) \lor (p \land q \land r) \lor (p \land q \land \lnot r)\) |

| C | 1 ∪ 3 ∪ 5 ∪ 7 | \((\lnot p \land \lnot q \land r) \lor (\lnot p \land q \land r) \lor (p \land \lnot q \land r) \lor (p \land q \land r)\) |

UCG

Let set A = [verve vim vigor], set B = [butter vinegar pepper vigor].

For each of the following set operations, give its resulting members (as a

vector of symbols):

- The set of words that are in A or B; call this set C.

- The set of words that are in A and B; call this set D.

- The subset of set C of words that start with ‘v’.

- The subset of set C of words that end with ‘r’.

- The subset of set C of words that start with ‘v’ and end with ‘r’.

- The subset of set D of words that have six letters.

ABM

Now, the fact of B being ‘entirely contained’ in A shown above has another shorter way to say it, which note involves a conditional:

B is a subset of A, denoted B \(\subseteq\) A, means that if \(x \in\) B then \(x \in\) A.

A is a superset of B, denoted A \(\supseteq\) B, means that B is a subset of A.

B is a proper subset of A, denoted B \(\subset\) A, means that there is some element of A that is not in B. In other words, A is strictly speaking ‘bigger’ than B.

A is a proper superset of B, denoted A \(\supset\) B, means that B is a proper subset of A.

| Logical Statement | Equivalent Set Statement |

|---|---|

| p → q | \(\mathsf{A} \subseteq \mathsf{B}\) |

| p ← q (or q → p) | \(\mathsf{A} \supseteq \mathsf{B}\) (or \(\mathsf{B} \subseteq \mathsf{A}\)) |

| p ↔ q | \(\mathsf{A} \subseteq \mathsf{B} \land \mathsf{B} \subseteq \mathsf{A}\) (or \(\mathsf{A} = \mathsf{B}\)) |

The last row’s biconditional means that if each of two sets is a subset of the other, then the sets are equal — they are the same set. If we turn sets into numbers (by lowercasing their names) then this is entirely analogous to saying: a ≤ b ∧ b ≤ a → a = b. While thinking of numbers, another analogy that is helpful is to see the subset relation as a “less-than-or-equal-to” relation, which mirrors the similarity of the symbols, so \(\mathsf{A} \subseteq \mathsf{B} \Leftrightarrow \mathsf{a} \le \mathsf{b}\). Not just analogously, but in actuality thus it is if a denotes the size of (number of elements in) A, written as a = | A | (note the overloaded use of the vertical bars), and ditto for b = | B |.

OIO

Here is a “setq chain” illustrating subset size compared to superset size:

(setq A [s i z e] a 4 B [b i g g e r i n s i z e] b 12 A-is-a-subset-of-B t a-is-less-than-or-equal-to-b t)

Write or find built-in functions so that you can revise this chain of

assignments to avoid the literals 4, 12 and t, instead replacing

them with function calls.

UIJ

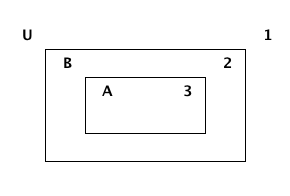

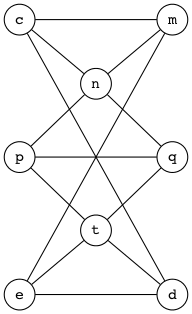

The following Venn diagram numbers three regions of a set B with a subset A relationship within a Universal set U:

Make a connection between the logical conditional operator (→) and the definition of a subset. Refer to the three numbered regions in your answer.

OQT

Let p and q be the propositions:

p: I studied.

q: I got an A on the test.

Express each of the propositions below as an English sentence:

- p → q

- ¬ p ∨ ¬ q

- ¬ p → (p ∨ q)

- ¬ p → ¬ q

UTQ

Let p and q be the propositions:

p: You applied for admission at BYU-Idaho.

q: You were accepted.

Express these sentences as propositions using logical connectives.

- You applied for admission at BYU-Idaho and were accepted.

- You did not apply for admission at BYU-Idaho but were still accepted.

- You applied for admission at BYU-Idaho but were not accepted.

- Either you did not apply for admission at BYU-Idaho and didn’t get accepted or you did apply and got accepted.

OYU

There are many different ways in English to put the same conditional statement, ‘if p then q’. Here are four:

- omit the ‘then’

- if p, q

- reversed

- q if p

- ‘whenever’ means ‘if’

- whenever p, q (or q whenever p)

- p → q

- (pronounced) p only if q.

Find at least four other ways.

UOH

Let p and q be the propositions:

p: You applied for admission at BYU-Idaho.

q: You were accepted.

Express these sentences as propositions using logical connectives:

- Your applying for admission at BYU-Idaho is necessary for your being accepted.

- Your being accepted is a sufficient condition for your applying for admission at BYU-Idaho.

- Your applying for admission at BYU-Idaho is necessary and sufficient for your being accepted.

OZD

Determine whether these conditional statements are true or false:

- If 2 + 2 = 4, then pigs can fly.

- If 2 + 7 = 5, then Elvis Presley is still alive.

- If pigs can fly, then dogs can’t fly.

- If dogs have four legs, then ostriches have two legs.

UZM

Determine whether these biconditionals are true or false:

- 2 + 1 = 3 if and only if 1 + 2 = 3.

- 1 + 2 = 3 if and only if 3 + 1 = 6.

- 1 + 3 = 2 if and only if the earth is flat.

- 1 < 2 if and only if 2 < 3.

OOY

Write each of these sentences as ‘if p, then q’ statements:

- It is necessary to sign up to win the contest.

- I get a cold whenever I go outside.

- It is sufficient to be an A student to receive the scholarship.

- As long as you leave now, you will get there on time.

- I’ll get half off if I act now.

UFZ

Investigate the variations of conditional statements known as the converse, the inverse and the contrapositive.

OKJ

Write the converse, inverse, and contrapositive of each of these statements:

- If it rains today, we won’t go to the park.

- If you do your homework, I’ll give you a pat on the back.

- Whenever I babysit, I get sick.

- Every time there is a quiz, I go to class.

- I wake up late when I stay up past my bedtime.

UVH

Construct a truth table for each of these propositions:

- \(\mathsf{p} \rightarrow \mathsf{q}\)

- \(\mathsf{p} \oplus \mathsf{q}\)

- \(\mathsf{p} \rightarrow \lnot \mathsf{q}\)

- \(\lnot \mathsf{p} \rightarrow \mathsf{q}\)

- \(\mathsf{p} \wedge \lnot \mathsf{q}\)

OJM

Construct a truth table for each of these propositions:

- \(\mathsf{p} \rightarrow (\lnot \mathsf{p})\)

- \(\mathsf{p} \leftrightarrow \mathsf{q}\)

- \(\mathsf{p} \leftrightarrow (\lnot \mathsf{p})\)

- \(\mathsf{p} \land \mathsf{p}\)

- \(\mathsf{p} \lor \mathsf{p}\)

ULQ

Construct a truth table for (p → q) → (q → r) → (r → s).

OTD

Construct a truth table for (p ∨ q) ∧ (¬ p ∨ r) → (q ∨ r).

ABN

Being a logical language, the propositional calculus obeys certain laws. The main law is that some propositions are logically equivalent to others.

Two compound propositions \(p\) and \(q\) are logically equivalent, signified

\(p \equiv q\)

when they have the same truth values under every model. This equivalence is expressed operationally by saying that the biconditional

\(p \leftrightarrow q\)

is always true.

Special terms exist for this type of iff, its opposite, and neither it nor its opposite:

A tautology is a proposition that is always true.

A contradiction is a proposition that is always false.

A contingency is a proposition that is neither a tautology nor a contradiction, but is sometimes true, sometimes false.

An example of each:

- p ∨ ¬ p

- is a tautology.

- p ∧ ¬ p

- is a contradiction.

- p → q

- is a contingency.

UJU

Use truth tables to verify the commutative laws.

- \(p \vee q \equiv q \vee p\)

- \(p \wedge q \equiv q \wedge p\)

OLH

Use truth tables to verify the associative laws.

- \((p \vee q) \vee r \equiv p \vee (q \vee r)\)

- \((p \wedge q) \wedge r \equiv p \wedge (q \wedge r)\)

UWY

Use truth tables to verify the distributive laws.

- \(p \wedge (q \lor r) \equiv (p \wedge q) \lor (p \wedge r)\).

- \(p \vee (q \wedge r) \equiv (p \vee q) \wedge (p \vee r)\).

OBW

Use truth tables to verify De Morgan’s laws:

- \(\neg (p \vee q) \equiv \neg p \wedge \neg q\)

- \(\neg (p \wedge q) \equiv \neg p \vee \neg q\)

USL

Use truth tables to verify some miscellaneous laws, letting \(\mathbf{1}\) = true, \(\mathbf{0}\) = false:

- \(p \land \mathbf{1} \equiv p\) (idempotence)

- \(p \lor \mathbf{0} \equiv p\) (idempotence)

- \(\neg \neg p \equiv p\) (double negation)

- \(p \land \mathbf{0} \equiv \mathbf{0}\) (absorption)

- \(p \lor \mathbf{1} \equiv \mathbf{1}\) (absorption)

OYP

Match the following set identities with their counterparts in the miscellaneous logic laws:

- A ∩ U = A

- A ∪ U = U.

- A ∪ ∅ = A.

- A ∩ ∅ = ∅.

- \(\overline{\overline{\mathsf{A}}} = \mathsf{A}\).

UBV

Use DeMorgan’s Laws to find the negations of the propositions:

- Winning the first round is necessary for winning the trophy.

- Winning the tournament is sufficient for winning the trophy.

- I am powerful and successful.

- You can pass or fail this test.

- Getting an A on the final exam is necessary and sufficient for passing this class.

OKQ

Show that \((p \leftrightarrow q) \wedge (p \leftrightarrow r)\) and \(p \leftrightarrow (q \wedge r)\) are logically equivalent.

UKF

Show that \(\neg(p \leftrightarrow q)\) and \(p \leftrightarrow \neg q\) are logically equivalent.

OKL

Determine if \((p \vee q) \wedge (\neg p \vee r) \rightarrow (q \vee r)\) is a tautology.

UEZ

Find a compound proposition involving the propositional variables p, q, and r that is true when p and q are true and r is false, but is false otherwise.

ABO

After some good practice constructing truth tables, the time is ripe for exercising some code in a truth-table generator context:

OYW

(defun --> (p q) "Conditional: p only if q" (or (not p) q)) (defun <--> (p q) "Biconditional: p if and only if q" (and (--> p q) (--> q p))) (defun valid-connective (op) (or (eq op 'and) (eq op 'or) (eq op 'xor) (eq op '-->) (eq op '<-->)))

The prop-eval function evaluates propositions that are based on vectors of

symbols, prompting the user for their truth values, combining them two-by-two

with the valid connectives, and minimally formatting the output. It even

checks for errors!

(defun prop-eval (prop) (unless (and (vectorp prop) (= 3 (length prop)) (valid-connective (elt prop 1))) (error "bad parameters")) (let* ((op (elt prop 1)) (l (eval (elt prop 0))) (r (eval (elt prop 2))) (lval (y-or-n-p (mapconcat 'symbol-name l " "))) (rval (y-or-n-p (mapconcat 'symbol-name r " "))) (result (eval (list op lval rval)))) (list l (list lval) op r (list rval) 'yields result)))

Try it with a couple of compound propositions:

(let* ((p [It is raining]) (q [The grass is wet]) (p-and-q [p and q])) (prop-eval p-and-q))

([It is raining] (t) and [The grass is wet] (t) yields t)

(let* ((p [It is raining]) (q [The grass is wet]) (p-onlyif-q [p --> q])) (prop-eval p-onlyif-q))

([It is raining] (t) --> [The grass is wet] (nil) yields nil)

You try it with the other three connectives.

UEF

Study this fancier version of prop-eval (and supporting cast). Note that

this code only works for simple propositions consisting of three symbols — l

op r, to abbreviate. How would you make it handle more complex propositions?

(defun stringify (prop) (let* ((str (mapconcat 'symbol-name prop " "))) (downcase (substring str 0 (- (length str) 1))))) (defun fancier-prompt (str) (let* ((prompt (concat "Is it the case that " str "? ")) (answer (y-or-n-p-with-timeout prompt 5 t))) (princ (format "Given %s is %s\n" str (if answer 'true: 'false:))) answer)) (defun fancier-output (result l op r) (princ (format "It is %s that %s %s %s.\n" (if result 'true 'false) l op r))) (defun prop-eval (prop) (unless (and (vectorp prop) (= 3 (length prop)) (valid-connective (elt prop 1))) (error "bad parameters")) (let* ((op (elt prop 1)) (l (eval (elt prop 0))) (r (eval (elt prop 2))) (lstr (stringify l)) (rstr (stringify r)) (lval (fancier-prompt lstr)) (rval (fancier-prompt rstr)) (result (eval (list op lval rval)))) (fancier-output result lstr op rstr)))

Note the periods to close sentences properly and allow stringify to do its

job better:

(let* ((p [It is raining.]) (q [The grass is wet.]) (p-onlyif-q [p --> q])) (prop-eval p-onlyif-q))

Given it is raining is true: Given the grass is wet is true: It is true that it is raining --> the grass is wet.

OUB

Implement the reverse conditional function (<--).

Find two shorter ways to correctly implement the biconditional function (<-->).

UQY

This is an exercise in implementing logic gate Boolean functions to generate truth tables of compound Boolean functions.

Specifically, your task is to implement the not1, or2 and and2

functions, arithmetically (i.e., do not use any conditional logic — if

or cond or the like). Use only the operators +, -, and *. The inputs to

these three functions will be zeros or ones only. You’ll know you got it right

when you only get t from evaluating the final three code blocks.

(defun not1 (x) ;; implement me ) (defun and2 (x y) ;; implement me ) (defun or2 (x y) ;; implement me )

(defun truth-table-row-inputs (i) (elt [[0 0 0] [0 0 1] [0 1 0] [0 1 1] [1 0 0] [1 0 1] [1 1 0] [1 1 1]] i)) (defun truth-table-row-with-output (i func) (let* ((inputs (append (truth-table-row-inputs i) nil)) (output (apply func inputs))) (apply 'vector (append inputs (list output))))) (defun f1 (p q r) (or2 (and2 p q) (not1 r))) (defun f2 (p q r) (and2 p (or2 q (not1 r)))) (defun f3 (p q r) (or2 p (and2 q r))) (defun generate-truth-table-for (func) (vector (truth-table-row-with-output 0 func) (truth-table-row-with-output 1 func) (truth-table-row-with-output 2 func) (truth-table-row-with-output 3 func) (truth-table-row-with-output 4 func) (truth-table-row-with-output 5 func) (truth-table-row-with-output 6 func) (truth-table-row-with-output 7 func)))

(equal (generate-truth-table-for 'f1) [[0 0 0 1] [0 0 1 0] [0 1 0 1] [0 1 1 0] [1 0 0 1] [1 0 1 0] [1 1 0 1] [1 1 1 1]])

(equal (generate-truth-table-for 'f2) [[0 0 0 0] [0 0 1 0] [0 1 0 0] [0 1 1 0] [1 0 0 1] [1 0 1 0] [1 1 0 1] [1 1 1 1]])

(equal (generate-truth-table-for 'f3) [[0 0 0 0] [0 0 1 0] [0 1 0 0] [0 1 1 1] [1 0 0 1] [1 0 1 1] [1 1 0 1] [1 1 1 1]])

DEF

Do Everything First with Functions.

Dueling Priorities — Which Will Win?

(Work Will Win When Wishy-Washy Wishing Won’t!)

DEG

Til has assigned a lot of homework. Ila complains to Abu about it. Abu is sympathetic.

“I mostly get the logical connectives, but why we don’t just call them operators is what I don’t get. They’re the same as the logical operators in JavaScript, and using a different word …”

Abu interrupted Ila’s rant. “What’s JavaScript?”, he asked.

“It’s a programming language — the one I mostly program in. Anyway, what I also don’t get is the conditional operator. It just seems illogical that it’s defined the way it is. How did Til put it?”

“I believe he said ‘p only if q is true, except when p is more true than q’,” said Abu.

“That’s what I’m talking about, what does ‘more true than’ mean? Something is either true or it’s not. How can it be ‘more true’? Truer than true??”

“Well, here’s how I see it,” said Abu. “When they have the same truth value, both true or both false, neither is more or less than the other, they’re equal.”

Ila felt her face go red. “Oh, now I get it — a true p is greater than a false q. And the last case, when p is false and q is true, p is less true than q. Way less, because it has zero truth.”

“Irrelevant to the truth of q,” said Abu. “I drew its truth table, like Til asked, and only one row is false, the rest true. Perhaps you drew it this way too?”

| p | q | p → q |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

Ila frowned. “Mine has the last column centered under the arrow, but otherwise it’s the same as yours.”

“I like centered better,” said Abu. “Til will be glad we get it, I think, but do you think he was going to explain it some more?”

“He will if we ask, but I don’t want to ask him — will you?” said Ila.

“No problem,” said Abu. “I don’t mind appearing ignorant, because appearances aren’t deceiving in my case — I really am ignorant!”

“Well, I hate asking dumb questions,” said Ila.

“Never been a problem for me,” said Abu with a slow exhalation.

Ila voiced her other main complaint. “And I’m still floored that he said, go learn lisp, it will do you good!”

Abu nodded. “I agree that seems like a lot to ask, but I’m enjoying the challenge. Lisp is cool!”

Ila sighed. “It certainly lives up to its name: Lots of Irritatingly Silly Parentheses, and if you ask me, it’s just confusing.”

Abu smiled. “I found this xkcd comic that compares lisp to a Jedi’s lightsaber. See, I got the Star Wars reference. And by the way, just to show you what planet I’m from, I went and watched Aladdin. So let’s see, Chewbacca is Abu to Han Solo’s Aladdin, right!”

“Hardly,” said Ila. “So you saw the movie and you still want us to call you Abu?!”

DEH

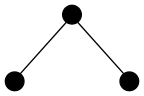

“Think of it this way,” said Til. “Inside versus Outside. With your mind’s eye, visualize a couple of circles, two different sizes, the smaller inside the larger. Make them concentric. Okay? Now consider the possibilities. Can something be inside both circles simultaneously?”

Abu and Ila nodded simultaneously.

“That’s your p-and-q-both-true case. Now, can something be outside both circles? Yes, obviously. That’s your p-and-q-both-false case. And can something be inside the outer circle without being inside the inner one? Yes, and that’s when p is false and q is true.”

Ila was feeling a tingle go up her spine. She blurted, “I see it! The only impossibility is having something inside the inner circle but not inside the outer — p true and q false. Makes perfect sense!”

Til beamed. “You got it, Ila! How about you, Abu?”

Abu just smiled and nodded his head.

“Would you please explain ‘let’ more?” said Ila. She was getting past her reluctance to ask questions, and it felt great!

“Think cars,” said Til. “A car has a body built on a frame, just like a let has a body built on a frame. But without an engine and a driver, a car just sits there taking up space. The lisp interpreter is like the engine, and you, the programmer, are the driver.”

Abu had a thought. “A computer also has an engine and a driver, like processor and programmer, again. But cars contain computers, so I doubt equating a car with a computer makes sense.”

Til agreed. “You see a similarity but also a big difference in the two. A car is driven, but the only thing that results is moving physical objects (including people) from here to there. That’s all it does, that’s what it’s designed to do. But a computer is designed to do whatever the programmer can tell it to do, subject to the constraints of its design, which still — the space of possibilities is huge.”

Ila was puzzled. “Excuse me, but what does this have to do with ‘let’?”

Til coughed. “Well, you’re right, we seem to be letting the analogy run wild. Running around inside a computer are electrons, doing the ‘work’ of computation, electromagnetic forces controlling things just so. It’s still electromagnetics with a car, for example, combustion of fuel. But these electromagnetic forces are combined with mechanical forces to make the car go.”

“Just think of ‘let’ as initiating a computational process, with forces defined by the lisp language and interacting via the lisp engine with the outside world. Then letting computers be like cars makes more sense. Do you recall that a lisp program is just a list?”

Abu nodded, but interjected. “It’s really a list missing its outermost parentheses, right?”

Til’s glint was piercing. “Ah, but there’s an implied pair of outermost parentheses!”

Ila fairly stumbled over her tongue trying to blurt out. “And a ‘let nil’ form!”

Now it was Abu’s turn to be puzzled. He said, “Wait, why nil?”

Ila rushed on, “It just means that your program can be self-contained, with nil extra environment needed to run. Just let it go, let it run!”

DEI

Let’s leave ‘let’ for now, and take another look at lisp functions that we can define via the defun special form.

(defun <parameter-list> [optional documentation string] <body>)

A few examples of defining functions via defun were given above and in the

elisp mini-primer. Note that this language feature is convenient, but not

strictly necessary, because any function definition and call can be replaced

with a let form, where the frame bindings are from the caller’s

environment.

For example, the function list-some-computations-on, defined and invoked

(another way to say called) like this:

(defun list-some-computations-on (a b c d) "We've seen this one before." (list (+ a b) (/ d b) (- d a) (* c d))) (list-some-computations-on 1 2 3 4)

This definition/call has exactly the same effect as:

(let ((a 1) (b 2) (c 3) (d 4)) (list (+ a b) (/ d b) (- d a) (* c d)))

Of course, any procedural (or object-oriented) programmer knows why the first way is preferable. Imagine the redundant code that would result from this sequence of calls if expanded as above:

(list-some-computations-on 1 2 3 4) (list-some-computations-on 5 6 7 8) ;;... (list-some-computations-on 97 98 99 100)

Functions encapsulate code modules (pieces). Encapsulation enables modularization. The modules we define as functions become part of our vocabulary, and a new module is like a new word we can use instead of saying what is meant by that word (i.e., its definition) all the time.

But stripped down to its core:

A function is just an object that takes objects and gives other objects.

Speaking completely generally, these are objects in the ‘thing’ sense of the word, not instances of classes as might be the case if we were talking about object-oriented programming.

The question arises: Are there any restrictions on what objects can be the inputs to a function (what it takes) and what objects can be the outputs of a function (what it gives)? Think about and answer this question before looking at the endnote.

Again, a function is simply an object, a machine if you will, that produces outputs for inputs. Many terms describing various kinds and attributes of functions and their machinery have been designed and pressed into mathematical and computer scientific service over the years:

- domain is the term for the set of all possible inputs for a function. (So functions depend on sets for their complete and rigorous definitions.)

- codomain is the set of all possible outputs for a function.

- range is the set of all actual outputs for a function (may be different — smaller — than the codomain).

- onto describes a function whose range is the same as its codomain (that is, an onto function produces (for the right inputs) all possible outputs).

- surjective is a synonym for onto.

- surjection is a noun naming a surjective function.

- one-to-one describes a function each of whose outputs is generated by only one input.

- injective is a synonym for one-to-one.

- injection is a noun naming an injective function.

- bijective describes a function that is both one-to-one and onto.

- bijection is a noun naming a bijective function.

- recursive describes a function that calls itself.

- arguments are the inputs to a function (argument “vector” (argv)).

- arity is short for the number of input arguments a function has (argument “count” (argc)).

- k-ary function — a function with k inputs.

- unary – binary – ternary — functions with 1, 2 or 3 arguments, respectively.

- infix notation describes a binary function that is marked as a symbol between its two arguments (rather than before).

- prefix notation describes a k-ary function that is marked as a symbol (such as a word (like list)) before its arguments, frequently having parentheses surrounding the arguments, but sometimes (like in lisp) having parentheses surrounding symbol AND arguments.

- postfix notation describes a function that is marked as a symbol after its arguments. Way less common.

- image describes the output of a function applied to some input (called the pre-image of the output).

DEJ

So the image of 2 under the square function is 4. The square function’s pre-image of 4 is 2. Since the square function (a unary, or arity 1 function) is a handy one to have around, we defun it next:

(defun square (number) "Squaring is multiplying a number by itself, AKA raising the number to the power of 2." (* number number))

There are many one-liners just itching to be defuned (documentation string (doc-string for short) deliberately omitted):

(defun times-2 (n) (* 2 n)) (defun times-3 (n) (* 3 n))

Let’s give one we’ve seen before a doc-string, if only to remind us what the ‘x’ abbreviates:

(defun xor (p q) "Exclusive or." (if p (not q) q))

How about ‘not’ with numbers?

(defun not-with-0-1 (p) (- 1 p))

As written, all of these one-liner functions are problematic, take for example

the last one. The domain of not-with-0-1 is exactly the set [0 1], and

exactly that set is its codomain (and range) too. Or that’s what they should

be. Restricting callers of this function to only pass 0 or 1 is a non-trivial task,

but the function itself can help by testing its argument and balking if given

something besides 0 or 1. Balking can mean many things — throwing an

exception being the most extreme.

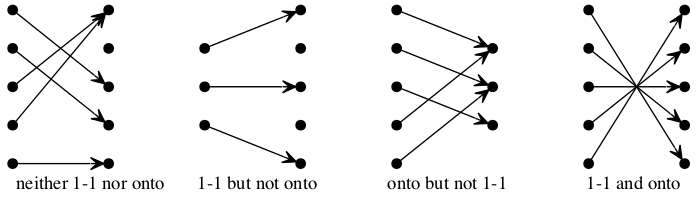

We next examine conditions for functions being onto versus one-to-one.

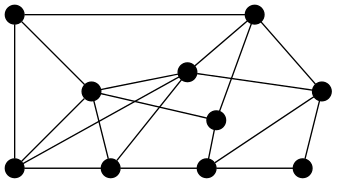

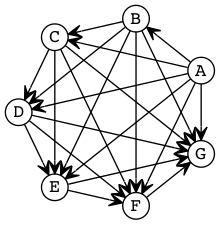

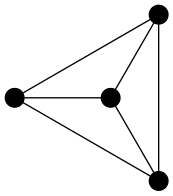

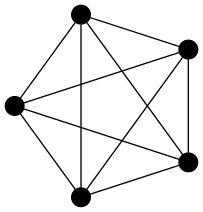

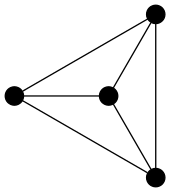

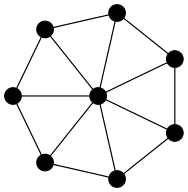

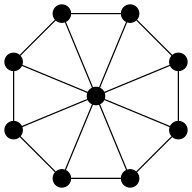

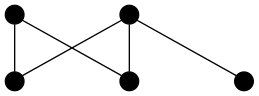

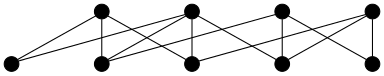

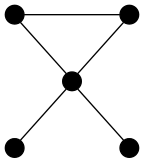

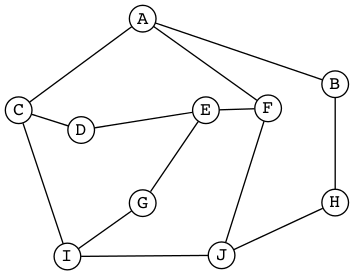

A necessary condition for a function to be onto is that the size of its codomain is no greater than the size of its domain. In symbols, given f : A → B, Onto(f) → |A| ≥ |B|. A necessary (but not sufficient) condition for a function to be bijective is for its domain and codomain to be the same size. We verify these claims pictorially by way of the following examples — simple abstract functions from/to sets with three or five elements:

TBD

A function is one-to-one if and only if it is onto, provided its domain and codomain are the same size.

Put this statement in symbolic form using the adjectives injective and surjective, and argue for its truth using the following formal definitions:

A function from domain A to codomain B

f : A → B

is

- injective

- if f(m) ≠ f(n) whenever m ≠ n, for all m ∈ A and for all n ∈ A;

and/or is

- surjective

- if for all b ∈ B there exists an a ∈ A such that f(a) = b.

DEK

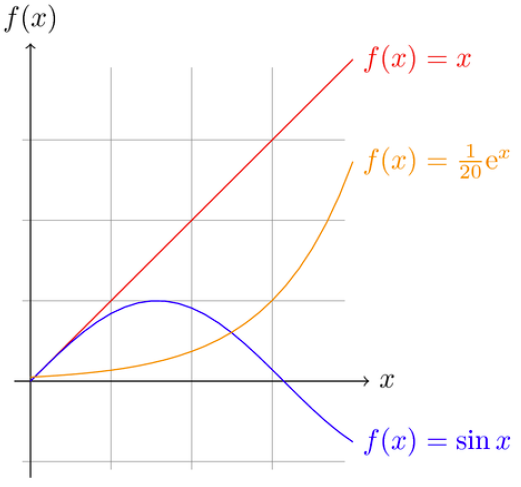

We’ve been viewing a function a certain way, mostly with a programming bias, but keep in mind that functions can be viewed in many other ways as well. The fact that functions have multiple representations is a consequence of how useful each representation has proven to be in its own sphere and realm. A function is representable by each of the following:

- assignment

- association

- mapping (or map)

- machine

- process

- rule

- formula

- table

- graph

and, of course,

- code

These representations (mostly code) will be illustrated through examples and exercises. The bottom line: mathematical functions are useful abstractions of the process of transformation. Computer scientific functions follow suit.

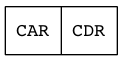

We first introduce one of the most prominent functional-programming-language features of lisp or indeed any language claiming to be functional:

A lambda is an “anonymous” or “nameless” function.

From the elisp documentation:

(lambda ARGS BODY) Return a lambda expression. A call of the form (lambda ARGS BODY) is self-quoting; the result of evaluating the lambda expression is the expression itself. The lambda expression may then be treated as a function, i.e., stored as the function value of a symbol, passed to =funcall= or =mapcar=, etc. ARGS should take the same form as an argument list for a =defun=. BODY should be a list of Lisp expressions.

In a way, lambda is like let, only instead of a list of variable bindings

there is a list of just variables — the arguments to the function —

referenced by the expressions in the body.

This anonymous function form is useful for creating ad hoc functions that to give a name to would be rather a bother.

For example, the times-2 function defined above anonymizes as:

(lambda (n) (* 2 n))

Now the great secret of defun can be revealed. It is merely a convenience

method for creating a lambda expression!

(symbol-function 'times-2)

(lambda (n) (* 2 n))

So using defun to define a function has the exact effect of the following

(fset for “function set”) code that sets the symbol times-2’s function

“slot” (or cell) to its definition:

(fset 'times-2 (lambda (n) (* 2 n)))

For another example, let’s say we want a function whose definition is “if the

argument n is even return n halved, otherwise return one more than thrice n”.